In a perfect world, higher resolutions, expanded color, and enhanced contrast would be no issue for existing copper cables. In reality, physics limits the distance over which copper cables can carry full-bandwidth 4K signals.

Short runs are fine, but copper HDMI cables have signal integrity problems over longer distances, and IP-based solutions require compression, which introduces its own problems for 4K video.

Compression to the Rescue?

The IT industry developed data compression to reduce the amount of data that has to be transmitted. If the data rate is reduced far enough, the copper distance problem goes away.

There are two types of compression:

- Lossless compression exploits statistical redundancy to shrink the data without losing any of the original information. The achievable reduction is bounded by how much redundancy the data actually contains and is usually modest.

- Lossy compression discards information deemed to be less “important” to achieve a greater size reduction. “Visually lossless compression” is a confusing marketing term for a type of lossy compression; it still discards original video information permanently.
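The difference between the two types can be illustrated with a minimal Python sketch. It uses the standard-library `zlib` module for the lossless case and a simple bit-depth reduction as a stand-in for lossy coding. The synthetic gradient `samples` and the helper `quantize` are illustrative assumptions and do not represent how any particular video codec works.

```python
import zlib

# Synthetic "scanline": a smooth 10-bit gradient stored as 16-bit values (illustrative data only).
samples = [i % 1024 for i in range(4096)]
raw = b"".join(s.to_bytes(2, "big") for s in samples)

# Lossless: the round trip restores every byte exactly.
packed = zlib.compress(raw, 9)
restored = zlib.decompress(packed)
lossless_ok = restored == raw

def quantize(values, drop_bits):
    """Hypothetical lossy step: discard the low-order bits of each sample."""
    return [(v >> drop_bits) << drop_bits for v in values]

# Lossy: dropping 2 bits leaves fewer distinct levels; the originals cannot be recovered.
lossy = quantize(samples, 2)
max_error = max(abs(a - b) for a, b in zip(samples, lossy))
distinct_before = len(set(samples))
distinct_after = len(set(lossy))

print(f"lossless round trip exact: {lossless_ok}")
print(f"lossy max error per sample: {max_error}, levels {distinct_before} -> {distinct_after}")
```

Fewer distinct levels in a smooth gradient is the kind of loss that can show up on screen as banding.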

Compression requires computational effort and time to crunch data into the smallest package, and generally, the more time and processing available, the better the result looks. That is why the compression used by broadcasters can be very good.

Video extension solutions have neither the processing horsepower nor the time (latency) to do compression the same way, so video quality can suffer.

Compression can also interfere with, and often renders inoperable, proprietary enhancement technologies such as Dolby Vision.

Side-by-Side Comparisons

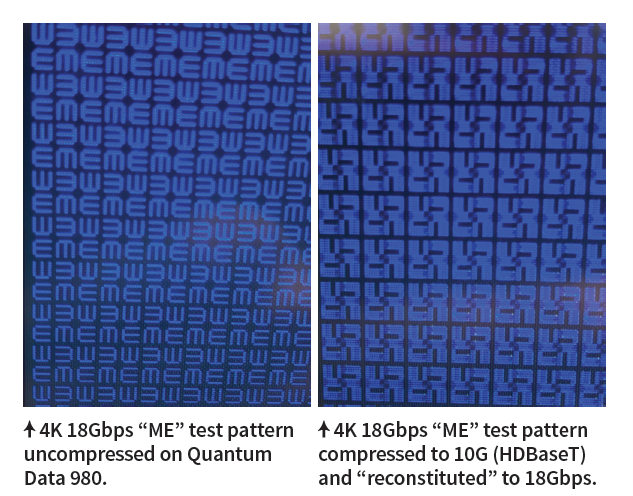

Whether the negative effects of compression are visible depends on the content, and most people have only seen 11Gbps 4K content compressed down to 10Gbps. The effects of this “light” compression can be hard to see.

However, when a side-by-side comparison is done using real 18Gbps 4K content, the issues with compression (banding, discoloration) are clearly visible. In fact, these issues are hard to “unsee” once someone has noticed them.
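For context on the data rates quoted above, the arithmetic can be sketched in Python. The sketch assumes HDMI 2.0 style TMDS signalling (three data channels, 10 bits on the wire per 8-bit value) and the standard 4400 x 2250 total raster for 3840 x 2160 video. The function name `tmds_gbps` and the chosen formats are illustrative; the article does not state which formats its 11Gbps and 18Gbps figures refer to.

```python
def tmds_gbps(h_total, v_total, fps, bits_per_component=8, chroma="4:4:4"):
    """Approximate HDMI TMDS line rate in Gbps (assumed model, see lead-in)."""
    pixel_clock = h_total * v_total * fps              # pixels per second, including blanking
    if chroma == "4:2:0":
        pixel_clock /= 2                               # 4:2:0 is carried at half the TMDS clock
    tmds_clock = pixel_clock * bits_per_component / 8  # deep color raises the clock
    return tmds_clock * 3 * 10 / 1e9                   # 3 channels, 10 wire bits per character

formats = {
    "4K60 4:4:4 8-bit": tmds_gbps(4400, 2250, 60),
    "4K60 4:2:0 10-bit": tmds_gbps(4400, 2250, 60, 10, "4:2:0"),
    "4K30 4:4:4 8-bit": tmds_gbps(4400, 2250, 30),
}
for name, rate in formats.items():
    print(f"{name}: {rate:.2f} Gbps")
```

Under these assumptions, 4K60 4:4:4 8-bit comes out at about 17.82Gbps and 4K60 4:2:0 10-bit at about 11.14Gbps, which is in line with the roughly 18Gbps and 11Gbps classes of content discussed here.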

Compression is simply unnecessary with most fiber solutions. Beware, however: some fiber solutions still require compression.

Fiber systems/switches that use a single 10Gbps SFP+ datacom module still need to compress an 18Gbps signal in the same way a copper 10Gbps solution does. These systems have the exact same drawbacks as any 10Gbps copper system.

There are also IP-based systems that use internal chipsets (like HDBaseT) that are currently constrained to about 8Gbps. These systems will not deliver the original uncompressed signal from source to sink because they must compress the signal to send it.

Particularly confusing are systems that are labeled and marketed as providing 18Gbps even though they use the same internal chipsets, which limit them to only 8Gbps or 10Gbps. These systems “fill in” data at the receiver to mimic a true uncompressed 18Gbps signal.
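As a rough illustration of how much has to be removed, the sketch below computes the minimum compression ratio needed to fit an 18Gbps signal into the 10Gbps and 8Gbps links mentioned above. It treats the nominal link rates as fully usable payload, which is an optimistic simplifying assumption, and `required_ratio` is a hypothetical helper name.

```python
def required_ratio(source_gbps, link_gbps):
    """Minimum compression ratio needed to fit the source into the link (1.0 = none)."""
    return max(1.0, source_gbps / link_gbps)

for link in (10.0, 8.0):
    ratio = required_ratio(18.0, link)
    removed = 1 - 1 / ratio  # fraction of the original bit rate that cannot be sent
    print(f"{link:g} Gbps link: at least {ratio:.2f}:1, about {removed:.0%} of the bit rate removed")
```

Under these assumptions, a 10Gbps link needs at least 1.8:1 compression and an 8Gbps link at least 2.25:1. Real links carry protocol overhead, so the practical ratios would likely be higher.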

There are cases where customers may not need the full capabilities of 4K. In the residential A/V market, many installers recommend 4K optical solutions for a home’s primary TV viewing areas and IP-based technologies like HDBaseT for the other rooms.

As with all technology transitions, the shift from copper to fiber won’t happen all at once. The quality vs. compression debate will continue as A/V professionals grapple with the tradeoffs.