When 4K resolution rolled out, there was naturally much excitement over the prospect of higher video quality. Not to be overlooked as 4K Ultra HD sets became commonplace, however, was the equally, if not more, impactful innovation of High Dynamic Range (HDR). Yet, as video industry luminary Joel Silver, founder of the Imaging Science Foundation (ISF), says, “The biggest challenge with HDR is getting it to turn on.” There are several “flavors” of HDR, but here we’re focusing on the one that has been around the longest and can make a major impact on your video installations: Dolby Vision.

A Brief Overview of Dolby Vision

Dolby Vision is the video companion to the company’s immersive object-based Atmos audio format. A quick history — back in 2007 Dolby Labs acquired Brightside Technologies, a Canadian company that had collaborated with the University of British Columbia to invent the HDR display.

By 2010 Dolby had perfected the technology, which it renamed Dolby Vision, and released a 4,000-nit Professional Reference Monitor (model PRM-4200).

This gained the attention of the Society of Motion Picture and Television Engineers (SMPTE), which in 2014 published the HDR standard called ST 2084 that became the springboard for other HDR formats including HDR10 and HDR10+.

In 2015 Dolby won a technical Academy Award for its reference monitor and its contribution to the industry.

Fast forward to today and there have been a few conversations on the CEDIA Community forum about technical issues related to getting Dolby Vision to work as expected.

These issues are most commonly HDMI-related, coming down to bandwidth and — further to Silver’s comments about turning it on — ensuring the metadata arrives intact.

The Tricky Part is the Metadata

What distinguishes Dolby Vision from other HDR formats is that it’s based on a 12-bit signal to effectively support the huge brightness and color range.

Dolby Vision is transmitted through HDMI in one of two ways, depending on system support. One option sends the signal through what is essentially an 8-bit 4:4:4 construct, with the compatible display extracting the 12-bit signal with its special Dolby signal processing.

The other method transmits the native 12-bit stream, so chroma needs to be limited to 4:2:2 or 4:2:0. As such, 4K Dolby Vision at up to 30Hz is supported by 10Gbps HDMI and regular HDBaseT, while 18Gbps HDMI is required to support up to 60Hz.

The signal also requires metadata, which you can think of as acting like HDR’s instruction sheet, so the display knows what to do with the signal. Dolby Vision predates HDMI’s support for HDR, so Dolby had to figure out its own way to get the metadata from source to display. The solution was to embed it within either of the aforementioned streams.

The big challenge for the technology integrator is that the combination of a proprietary video encoding and embedded metadata makes it vulnerable to non-licensed equipment that could easily and unwittingly blow it up.

So here are some tips to consider when designing and connecting interoperable HDMI systems to support Dolby Vision, in no particular order.

How to Flip the Switch on Any Dolby Vision Setup

#1: Compatibility

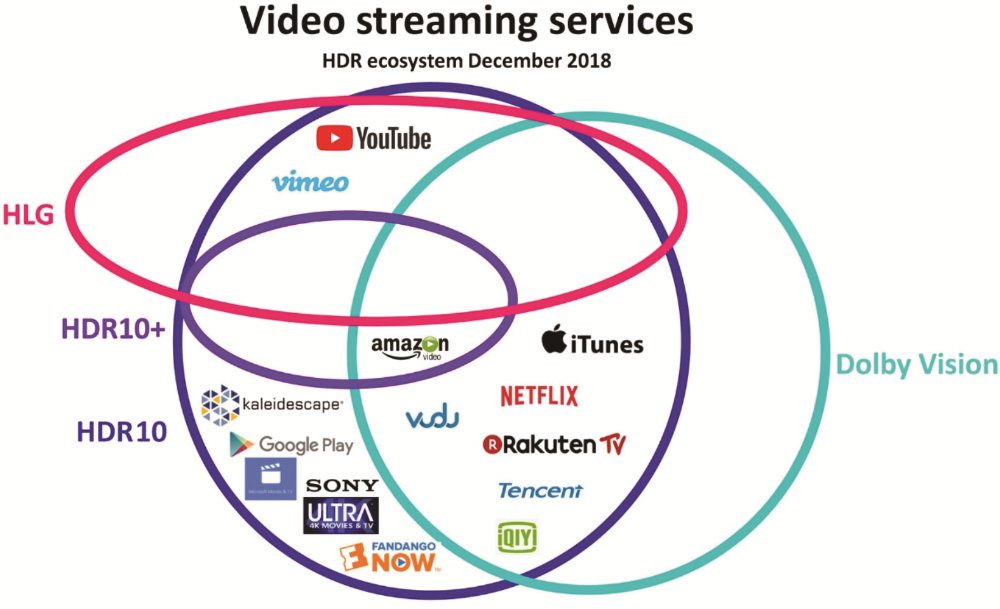

Ensure the content, source device and display are all Dolby Vision compatible. This may sound obvious, but just because it’s “HDR” doesn’t necessarily mean it supports Dolby Vision. My friend Yeori Geutskens in the Netherlands, who manages the Twitter handle @UHD4k, has created some excellent Venn diagrams to illustrate the state of HDR support among source devices, source content and displays (as of December/January).


#2: App Awareness

Be app aware. More of the content consumed today is streamed. The likes of Netflix and Amazon have a growing library of Dolby Vision enabled content, and that is expected to increase in a big way.

To date, the apps onboard the TV have sometimes needed to be used instead, because external media players had some conflict between hardware and content. Fortunately, that’s starting to improve.

Again, refer to the Venn diagrams and you’ll see, for example, that Apple TV 4K and iTunes both support Dolby Vision. Same for Amazon Fire TV and Amazon Video. Until recently that wasn’t the case, but to the credit of those respective companies things are getting much better very quickly.

Now all that’s needed for your customers is a reliable Internet connection …

#3: HDMI Bandwidth

Be mindful of HDMI bandwidth. For connecting those external media players, or in-home content such as optical discs and gaming, HDMI is king.

As mentioned before, 10Gbps is enough to support Dolby Vision at 4K at 24Hz to 30Hz with 12-bit 4:2:0 or 4:2:2 color. This is a typical 4K Blu-ray movie. 18Gbps HDMI can support Dolby Vision up to 4K/60. HDMI 2.1 will unlock higher frame rates and variable refresh rate gaming.
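To see where those thresholds come from, here is a rough sketch of the arithmetic in Python. It assumes the standard CTA-861 4K timings (a 297MHz pixel clock at 24Hz to 30Hz and 594MHz at 60Hz, blanking included) and HDMI's TMDS signalling of three channels at 10 bits per character. The function and table names are illustrative only, and the 10.2Gbps and 18Gbps ceilings are the nominal figures for the two link classes discussed here.

```python
# Rough estimate of the HDMI TMDS link rate for a 4K format.
# Assumption: CTA-861 4K timings, pixel clock in MHz including blanking.
PIXEL_CLOCK_MHZ = {24: 297.0, 30: 297.0, 60: 594.0}

# Assumption: nominal ceilings for "10Gbps" (10.2) and "18Gbps" HDMI links.
LINK_CLASSES_GBPS = [("10Gbps", 10.2), ("18Gbps", 18.0)]


def tmds_gbps(frame_rate, chroma, bit_depth):
    """Return the approximate total TMDS bit rate in Gbps."""
    clock = PIXEL_CLOCK_MHZ[frame_rate]
    if chroma == "4:4:4":
        char_rate = clock * bit_depth / 8        # deeper colour raises the clock
    elif chroma == "4:2:2":
        char_rate = clock                        # up to 12-bit rides in a fixed container
    elif chroma == "4:2:0":
        char_rate = clock * 0.5 * bit_depth / 8  # half the characters per pixel
    else:
        raise ValueError(f"unknown chroma format: {chroma}")
    return char_rate * 3 * 10 / 1000             # 3 channels x 10 bits per character


def smallest_link(frame_rate, chroma, bit_depth):
    """Name the smallest link class that carries the format, or None."""
    rate = tmds_gbps(frame_rate, chroma, bit_depth)
    for name, ceiling in LINK_CLASSES_GBPS:
        if rate <= ceiling:
            return name
    return None


formats = [(24, "4:2:0", 12), (30, "4:2:2", 12), (60, "4:2:2", 12), (60, "4:4:4", 12)]
for fmt in formats:
    print(fmt, round(tmds_gbps(*fmt), 2), "Gbps ->", smallest_link(*fmt))
```

Under these assumptions, 4K/30 12-bit 4:2:2 comes out at roughly 8.9Gbps and 4K/60 12-bit 4:2:2 at roughly 17.8Gbps, while 12-bit 4:4:4 at 60Hz overshoots the 18Gbps ceiling.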

#4: 4:4:4 No More

Don’t bother with 4:4:4 chroma. “4K 60 4:4:4” has been a popular moniker in A/V marketing for a few years now, used to denote 18Gbps HDMI support. However, it’s something of a misnomer in this era of HDR video, as conventional 4K/60 4:4:4 is limited to 8-bit color through HDMI 2.0.

Dolby Vision can use this as a carrier for its 12-bit goodness, but whether the result is 4:4:4 doesn’t really matter. It’s kind of a moot point with movies as the vast majority of titles are 24fps (frames per second). However, 4K gaming is best at 60fps, so color must be compromised to make it fit into the 18Gbps pipe.

It’s either 4:4:4 or HDR that has to go, and for me that’s a no-brainer. Xbox One X supports 4K/60 gaming with HDR, and a simple menu setting can subsample 4:4:4 down to 4:2:2 to enable HDR. The result will look much better.

By the way, pretty much all other sources we watch — TV, cable/sat, disc, media files, streamed content — are all in 4:2:0. Avoid setting sources to up-sample to 4:4:4 as it will only hinder bandwidth and generally not provide any other benefit. Stay native.
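The cost of up-sampling is easy to put a number on. The sketch below counts samples per pixel for each chroma format (one luma sample per pixel plus the shared color samples) and compares them. The names are illustrative, and it deliberately ignores how HDMI packs those samples on the wire.

```python
from fractions import Fraction

# Average samples per pixel: one luma sample plus the shared chroma samples.
SAMPLES_PER_PIXEL = {
    "4:4:4": Fraction(3),     # full-resolution Cb and Cr
    "4:2:2": Fraction(2),     # Cb and Cr shared by 2 pixels
    "4:2:0": Fraction(3, 2),  # Cb and Cr shared by a 2x2 block
}


def upsample_overhead(native, output):
    """How many times more samples the output format carries than the native one."""
    return SAMPLES_PER_PIXEL[output] / SAMPLES_PER_PIXEL[native]


for target in ("4:2:2", "4:4:4"):
    print(f"4:2:0 -> {target}: x{float(upsample_overhead('4:2:0', target)):.2f}")
```

Going from native 4:2:0 to 4:4:4 doubles the sample count without adding any picture information, which is the bandwidth you give away by not staying native.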

#5: To Extend or Not to Extend

Know the limits with HDBaseT extenders and/or switchers. Also, don’t try pushing formats that are beyond 10Gbps.

HDBaseT extenders and switchers that boast support for “4K 60 4:4:4” use some form of compression or what’s called color space conversion. The latter refers to RGB/4:4:4 to 4:2:0 conversion, but it may also down-sample 10- or 12-bit HDR signals to 8-bit.

This would crush the HDR signal even if the metadata were kept intact, and most such devices don’t keep it intact. Native HDBaseT supports Dolby Vision up to 10Gbps perfectly, as it’s a bit-exact passthrough technology.

#6: Scaling and Processing

Avoid ancillary devices that scale or process the video in any way. Because Dolby Vision uses proprietary encoding to manage the bandwidth load and optimize color rendering at the display, non-Dolby Vision equipped video processors or scalers generally would not know how to handle this. They would likely unwittingly mess up the signal and destroy the metadata.

The image needs to be sent to the display unadulterated, with direct passthrough on any in-line devices.
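One way to apply the bandwidth and processing tips at design time is to walk the signal chain on paper and flag every device that could break it. The sketch below is a purely hypothetical checklist in Python: the `Device` fields, the `chain_problems` helper and the example equipment are invented for illustration and do not correspond to any real product database or Dolby tool.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """Hypothetical description of one box in the HDMI signal chain."""
    name: str
    max_gbps: float                      # highest link rate it passes
    processes_video: bool = False        # scales, converts color or otherwise alters video
    dolby_vision_capable: bool = False   # only matters if it processes video


def chain_problems(devices, required_gbps):
    """List the reasons a Dolby Vision signal might not survive the chain."""
    problems = []
    for device in devices:
        if device.max_gbps < required_gbps:
            problems.append(
                f"{device.name}: limited to {device.max_gbps}Gbps, need {required_gbps}Gbps"
            )
        if device.processes_video and not device.dolby_vision_capable:
            problems.append(f"{device.name}: processes video without Dolby Vision support")
    return problems


# Example chain (invented equipment) carrying a roughly 17.8Gbps 4K/60 signal.
chain = [
    Device("UHD Blu-ray player", 18.0),
    Device("HDBaseT extender", 10.2),
    Device("Video scaler", 18.0, processes_video=True),
]
for problem in chain_problems(chain, 17.82):
    print(problem)
```

An empty list means nothing in this simplified model stands in the way; it is no substitute for testing the real system.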

#7: On-Screen Displays

Don’t expect on-screen display (OSD) to work with Dolby Vision content. That goes for overlays such as those from an A/V receiver (AVR).

For the reason stated in the previous point, for an AVR to put an OSD over Dolby Vision content it would need to render said OSD in Dolby Vision as well … which is too hard, so no OSD it is.

#8: Compression

Don’t fear compression, but tread with caution. Compression codecs have advanced a lot, and will continue to in the coming years. What differentiates good from not-so-good compression is whether any latency or image-quality compromise is noticeable.

Most compression systems over HDMI, HDBaseT and A/V-over-IP can’t support Dolby Vision. However, some very nice solutions can legitimately de-embed Dolby Vision metadata, compress the retained 12-bit signal to fit over a 1-gig network, and do it all with only single-digit milliseconds of latency. But uncompressed is still always best, if possible.
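For a sense of how hard those codecs have to work, here is a back-of-the-envelope calculation. It counts only active picture data (no blanking, audio or network overhead), and the 0.9Gbps usable-throughput figure for a 1-gig network is my assumption rather than a measured value.

```python
# Average samples per pixel for each chroma format.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def active_video_gbps(width, height, fps, chroma, bit_depth):
    """Raw data rate of the visible picture only, in Gbps."""
    bits_per_pixel = SAMPLES_PER_PIXEL[chroma] * bit_depth
    return width * height * fps * bits_per_pixel / 1e9


def required_ratio(video_gbps, usable_network_gbps=0.9):
    """Compression ratio needed to fit the video into the network (assumed 0.9Gbps usable)."""
    return video_gbps / usable_network_gbps


movie = active_video_gbps(3840, 2160, 24, "4:2:0", 12)   # typical 4K movie
gaming = active_video_gbps(3840, 2160, 60, "4:2:2", 12)  # 4K/60 with 12-bit 4:2:2

for label, rate in (("4K/24 4:2:0", movie), ("4K/60 4:2:2", gaming)):
    print(f"{label}: {rate:.2f}Gbps raw, about {required_ratio(rate):.1f}:1 compression")
```

Under these assumptions a 12-bit 4K/24 movie needs roughly 4:1 compression, while 12-bit 4K/60 4:2:2 needs roughly 13:1, which is why the quality of the codec matters so much.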

#9: Patience is a Virtue

Be patient. HDMI 2.1 will undoubtedly present us with some installation challenges, but once it gets going it promises to resolve many of the current limitations.

Folks at Dolby have told me that they won’t continue to embed their metadata once HDMI 2.1 kicks off, instead switching to the standardized dynamic metadata provided for in 2.1 (yep, HDMI has caught up to Dolby).

Also, the extra bandwidth could have your customers enjoying Dolby Vision content like gaming in true 4K/60 4:4:4, which needs 24Gbps, or 4K/120 or perhaps even 8K!

CTA, CEDIA Collaborating

There are currently five top-level HDR formats: Dolby Vision, HDR10, HDR10+, HLG and Technicolor SL-HDR1, and each may have permutations therein.

It’s complicated, but it’s not really a “format war.” Dolby Vision and HDR10+ may be competitors, but HLG is a totally viable complement for broadcast, and HDR10 will remain the baseline fallback. As for Technicolor, it’s excellent but might have been a matter of poor timing.

So when it comes to Dolby Vision projects, keep Joel Silver’s advice in mind and be sure to “turn the HDR on.”

Keep an eye out for CEB28, a new recommended practice that CTA and CEDIA’s joint R10 volunteer committee is working on for verifying HDMI distribution systems over alternative technologies.

Put that into practice, and if you aren’t already doing so, make sure you calibrate your clients’ displays to provide a proper foundation on which HDR can (and should) look absolutely stunning.