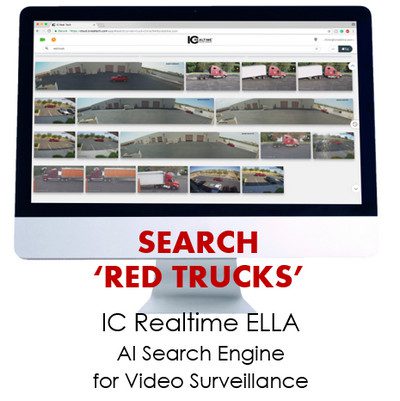

Say you have a week’s worth of surveillance videos and you just want to find that guy in the red shirt. Simply ask IC Realtime to show “red shirt,” and the company's new Ella AI system will scan the content and display relevant clips pretty quickly and accurately, judging by the demo at CEDIA 2017 in September. Likening the technology to “Google for video,” ICR will demonstrate the new service, based on the Camio visual AI engine, at a press event at CES 2018.

Camio was founded in 2015 by former Google executives, including CEO Carter Maslan, who, as director of product management, delivered Local Search to Google Maps, Google Earth, Google Mobile and Web Search.

Camio names a handful of commercial systems integrators and telematics companies as partners, but IC Realtime claims to be the first to incorporate the technology into cameras and network video recorders (NVRs) for commercial and consumer users.

IC Realtime, a favorite video-surveillance company among smart-home installers, should be the first camera company to introduce this type of video analytics and deep learning — including text recognition and object detection — to the channel.

ICR's implementation of Camio in new Ella 'Google for video' surveillance solution.

“Ella makes every nanosecond of video searchable instantly, letting users type in key words to find every relevant clip instead of searching through hours of footage,” according to a statement by IC Realtime (also known as IC Realtech).

Similar technologies might be available for large operations like airports and enterprises, “but they cost a ton of money,” said IC Realtime’s Brandon Dewitt at CEDIA.

Those types of facilities use loads of cameras, processors, video monitors, cabling infrastructure, personnel, and other resources — multiplied for redundant operation.

With Ella, however, “you could do this with just one camera,” Dewitt said.

The new consumer-friendly, cybersecure technology runs on a black box at the user's premises and on cloud-based servers that crunch more data after the edge device does its work. The AI engine compares recorded images to vast stores of digital content, then tags and presents pertinent clips just seconds after a user types a search term or speaks a phrase aloud. Bad results? Give them a thumbs down. Good ones? Thumbs up. Over time, the system learns what matters to each user.
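Neither IC Realtime nor Camio has said how the thumbs-up/thumbs-down signal is actually used. Purely as an illustration, here is a minimal Python sketch that assumes each clip already carries AI-generated labels and that feedback simply nudges a per-label weight used to rank later results. The class name `FeedbackRanker` and its `step` parameter are hypothetical.

```python
from collections import defaultdict


class FeedbackRanker:
    """Hypothetical re-ranker: per-label weights nudged by thumbs up/down.

    An illustration only, not Camio's or IC Realtime's actual model.
    """

    def __init__(self, step: float = 0.1) -> None:
        self.step = step  # how far one vote moves a label's weight (assumed)
        # Every label starts with a neutral weight of 1.0.
        self.weights: dict[str, float] = defaultdict(lambda: 1.0)

    def score(self, labels: set[str]) -> float:
        # A clip's score is the sum of the weights of its labels.
        return sum(self.weights[label] for label in labels)

    def rank(self, clips: dict[str, set[str]]) -> list[str]:
        # Highest-scoring clip IDs first; ties are broken by clip ID.
        return sorted(clips, key=lambda cid: (-self.score(clips[cid]), cid))

    def feedback(self, labels: set[str], thumbs_up: bool) -> None:
        # Thumbs up raises the weight of the clip's labels; thumbs down lowers it.
        delta = self.step if thumbs_up else -self.step
        for label in labels:
            self.weights[label] = max(0.0, self.weights[label] + delta)


ranker = FeedbackRanker()
clips = {"c1": {"dog", "grass"}, "c2": {"dog", "shadow"}}
print(ranker.rank(clips))  # ['c1', 'c2'] (a tie, broken by ID)
ranker.feedback(clips["c1"], thumbs_up=False)
print(ranker.rank(clips))  # ['c2', 'c1']
```

A thumbs down on the first clip lowers both of its labels, so the clip with the untouched "shadow" label moves ahead. A production system would more likely retrain or fine-tune a model than keep a flat weight table.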

At CEDIA, Dewitt searched IC Realtime’s own surveillance footage to isolate “dog” and “red.” You could also ask for “red dog” or “opening a door” or “opening a red door.” The service also employs optical character recognition (OCR) to read signs, license plates and other text, seemingly undeterred by different fonts, angles, colors, flourishes and other distortions.
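To make the difference between “red,” “red dog” and “opening a red door” concrete, here is a hypothetical sketch of keyword search over labels that an object detector, a color classifier and OCR have already attached to each clip. It assumes a plain inverted index with AND semantics and a tiny stop-word list; Ella's actual query handling has not been disclosed.

```python
from collections import defaultdict

# Filler words ignored in queries (an assumption; a real system would do more).
STOP_WORDS = {"a", "an", "the"}


def build_index(clip_tags: dict[str, list[str]]) -> dict[str, set[str]]:
    """Map each lower-cased tag word to the IDs of the clips carrying it."""
    index: dict[str, set[str]] = defaultdict(set)
    for clip_id, tags in clip_tags.items():
        for tag in tags:
            for word in tag.lower().split():
                index[word].add(clip_id)
    return index


def search(index: dict[str, set[str]], query: str) -> list[str]:
    """Return the sorted IDs of clips whose tags contain every query word."""
    words = [w for w in query.lower().split() if w not in STOP_WORDS]
    if not words:
        return []
    hits = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(hits)


# Hypothetical tags produced by object detection, color analysis and OCR.
clips = {
    "clip-1": ["dog", "red"],
    "clip-2": ["opening", "door", "red"],
    "clip-3": ["truck", "brown", "UPS"],
}
index = build_index(clips)
print(search(index, "red dog"))             # ['clip-1']
print(search(index, "opening a red door"))  # ['clip-2']
print(search(index, "UPS"))                 # ['clip-3']
```

Because OCR output is indexed the same way as object labels, a query for “UPS” matches the truck by its painted text rather than by its resemblance to other brown trucks.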

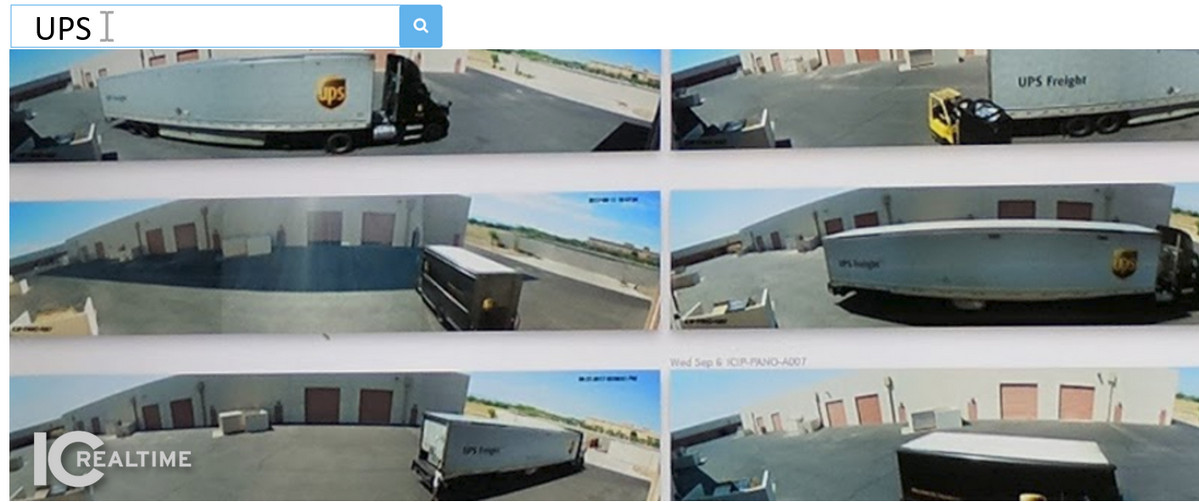

In searching “UPS,” for example, Dewitt explained that Ella would actually read the text, not just compare images to other brown trucks labeled “UPS” online. Sure enough, the search turned up a number of clips, each truck looking different from the next (image above).

Bandwidth and Storage Optimization

IC Realtime would prefer its own cameras be used in an Ella-enabled environment, but the system works with most industry-standard surveillance products. Ella discovers the cameras automatically, letting users determine how each should be treated to optimize bandwidth and storage.

Like most decent camera systems, ICR lets users map out zones, create alerts for activity in those zones, and trigger devices like floodlights via a compatible home-automation system or app. Adding that extra ingredient of object- and text-recognition provides a valuable piece of metadata. For example, “Text Mom if a man in a brown jacket approaches the front door.”
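A rule like “Text Mom if a man in a brown jacket approaches the front door” boils down to matching a zone plus a set of recognized attributes. The sketch below assumes detections arrive tagged with a zone name and a set of labels; the `Detection` and `Rule` types and the action strings are hypothetical, not ICR's API.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    zone: str         # user-drawn zone where the object was seen
    labels: set[str]  # attributes recognized by the (assumed) AI engine


@dataclass
class Rule:
    zone: str
    required_labels: set[str]
    action: str  # hypothetical action name, e.g. passed to a home-automation hub


def actions_for(rules: list[Rule], detection: Detection) -> list[str]:
    """Return the actions of every rule whose zone matches and whose labels are all present."""
    return [
        rule.action
        for rule in rules
        if rule.zone == detection.zone and rule.required_labels <= detection.labels
    ]


rules = [
    Rule("front door", {"man", "brown jacket"}, "text Mom"),
    Rule("driveway", {"car"}, "turn on floodlight"),
]
print(actions_for(rules, Detection("front door", {"man", "brown jacket", "package"})))
# ['text Mom']
```

Plain motion-zone systems can only test the zone; the recognized labels are the extra metadata that lets the rule ignore, say, a woman in a red coat at the same door.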

In all of this, Ella optimizes bandwidth and video storage with even more machine learning. Only content deemed “interesting” is stored in full resolution — not unlike the concept behind the new Google Clips camera.

Here's how ICR explains the technology:

Bandwidth

Rather than pushing every minute of footage to the cloud, systems using Ella leverage interest-based compression to record in HD only those events deemed “interesting” by the AI learning platform. A deep-learning neural network constantly pings the cloud to ask whether the subject in the scene is interesting and whether the cloud would like to see more of the event. All “uninteresting” events are still recorded and stored as low-resolution time lapse, so they can be viewed later without soaking up valuable bandwidth.
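ICR hasn't published how Ella decides what to keep in HD. The following is a hypothetical sketch of the storage policy described above, assuming an `is_interesting` callback stands in for the edge model's question to the cloud and that the time lapse is produced by keeping every Nth uninteresting frame at low resolution.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Frame:
    t: float          # timestamp in seconds
    labels: set[str]  # labels from an assumed on-premises detector


def plan_storage(
    frames: list[Frame],
    is_interesting: Callable[[Frame], bool],
    timelapse_every: int = 30,
) -> list[tuple[float, str]]:
    """Return (timestamp, quality) pairs for the frames worth keeping.

    Interesting frames are kept in HD. Uninteresting frames are thinned into a
    low-resolution time lapse that keeps only every `timelapse_every`-th one.
    """
    plan: list[tuple[float, str]] = []
    dull_seen = 0
    for frame in frames:
        if is_interesting(frame):
            plan.append((frame.t, "hd"))
        else:
            if dull_seen % timelapse_every == 0:
                plan.append((frame.t, "lowres"))
            dull_seen += 1
    return plan


# Ten one-second frames of a swaying tree, with a truck present at t=5 and t=6.
frames = [Frame(float(t), {"truck"} if t in (5, 6) else {"tree"}) for t in range(10)]
print(plan_storage(frames, lambda f: "truck" in f.labels, timelapse_every=4))
# [(0.0, 'lowres'), (4.0, 'lowres'), (5.0, 'hd'), (6.0, 'hd')]
```

Only four of ten frames leave the premises, and only two of those in HD, which is the bandwidth saving the quoted description is after.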

Interest-Based Compression

Complex scene analysis drives the engine behind Ella’s interest-based compression. Cameras connected to the Ella cloud learn what is in each frame and then analyze it to determine whether there is adaptive motion, meaning motion that is habitual rather than random, such as a tree swaying in the wind, and decide what to prioritize for the user. Through this pattern recognition, the Ella cloud reduces false positives by understanding that a tree blowing in the wind is not a notable event, whereas a delivery truck arriving onsite should be.
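Ella's scene analysis is proprietary, but the tree-versus-truck distinction can be illustrated with a much simpler frequency heuristic, swapped in here purely for explanation: divide the frame into grid cells, count how often each cell shows motion, and flag motion only in cells where it is historically rare. The class, the grid cells, and the `warmup` and `habitual_ratio` values are all assumptions.

```python
from collections import Counter

Cell = tuple[int, int]  # (row, column) of a coarse grid over the frame


class HabitualMotionFilter:
    """Report motion only in grid cells where motion is historically rare."""

    def __init__(self, habitual_ratio: float = 0.5, warmup: int = 20) -> None:
        self.habitual_ratio = habitual_ratio  # share of frames that counts as "habitual"
        self.warmup = warmup                  # frames to observe before reporting anything
        self.frames_seen = 0
        self.motion_counts: Counter = Counter()

    def update(self, moving_cells: set[Cell]) -> set[Cell]:
        """Record one frame's moving cells and return the ones worth flagging."""
        notable: set[Cell] = set()
        if self.frames_seen >= self.warmup:
            for cell in moving_cells:
                share = self.motion_counts[cell] / self.frames_seen
                if share < self.habitual_ratio:
                    notable.add(cell)
        self.frames_seen += 1
        self.motion_counts.update(moving_cells)
        return notable


f = HabitualMotionFilter(habitual_ratio=0.5, warmup=20)
tree = (0, 0)
for _ in range(20):
    f.update({tree})              # the tree sways in every frame during warmup
print(f.update({tree, (3, 4)}))  # {(3, 4)}: the tree is habitual, the new cell is not
```

A purely frequency-based filter like this would eventually treat a daily delivery truck as habitual, too, so object recognition of the kind described earlier would still be needed to tell the two apart.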

Prices, Hardware, Specs, Details

More details about Ella, including hardware, prices and launch dates, will be revealed at CES.

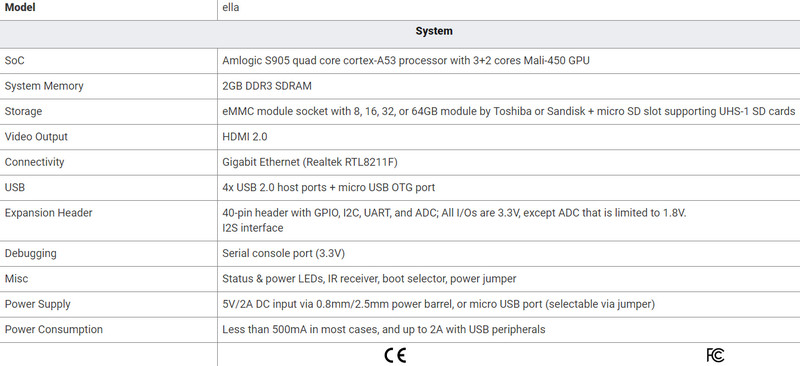

Unlike the major hardware requirements typical of enterprise-oriented visual-AI systems, ICR will implement Ella in a device as simple as a small Internet gateway with four USB ports, one HDMI output and an Ethernet port, supporting three cameras (according to preliminary specs of an Odroid box posted online). Additional hardware will be available for systems of up to 1,000 1080p streams.

Prices for the hardware and services will be provided next week, but Camio itself charges as little as $9.90 per month for basic human and car detection, including unlimited video storage, video history, live streaming and unlimited guest users.

The full feature set starts at $19.90 per month and adds advanced object labeling, OCR, metadata indexing for an on-premises video proxy server, and video-stream transcoding.

Other Developments in Visual AI

Video analytics has been a huge trend in surveillance over the past several years (CE Pro Trend #4, 2016), and object detection is expected to be the next big subset of that trend. Today's systems can distinguish fairly well between little people and big pets, and can even judge age, weight, gender and other characteristics, but they may not do so well at isolating “male with a backpack” in a large crowd, at least not within seconds when days of recordings are involved. And OCR seems to be a very rare feature in video analytics today.

The tools are coming fast and furious, though, at least for commercial applications.

In March 2017, Google announced the Google Cloud Video Intelligence API, which “uses powerful deep-learning models, built using frameworks like TensorFlow and applied on large-scale media platforms like YouTube,” according to the company. “The API is the first of its kind, enabling developers to easily search and discover video content by providing information about entities (nouns such as ‘dog,’ ‘flower’ or ‘human’ or verbs such as ‘run,’ ‘swim’ or ‘fly’) inside video content.”

Google's Cloud Video Intelligence API

Early deployments, however, are focused on pretty much everything except for consumer electronics. The API has been pitched to such places as “large media organizations and consumer technology companies, who want to build their media catalogs or find easy ways to manage crowd-sourced content,” Google explains. Like YouTube maybe? Cantemo is one early implementer, using the API for its next-gen media asset management (MAM) solutions.

Microsoft has been working on similar technologies at least since 2016, when its Azure Media Analytics group announced Video OCR.

“When used in conjunction with a search engine, you can easily index your media by text, and enhance the discoverability of your content,” Microsoft Azure Media Services program manager Adarsh Solanki wrote at the time. “This is extremely useful in highly textual video, like a video recording or screen-capture of a slideshow presentation. The Azure OCR Media Processor is optimized for digital text.”

Not surprisingly, Facebook is on an object-recognition tear, as well.

But the technology can't come to the consumer market fast enough. Even Google Photos does a pretty mediocre job of recognizing images, and you can forget about video. OCR would be especially nice for those of us with years' worth of tradeshow digitalia. Otherwise, it's awfully hard to find signs that say “Panja.”

Hoping to find some progress in object recognition, I did try a few things on Google Photos for the first time. Colors fared well. “Baby” did OK. “Blonde hair” about 50%. “Red hat” was a total miss but “blue shirt” did OK.

Even a search for “meal” did pretty well, showing steaks on a grill, people sitting around a table with plates, four almonds on a counter, and an onion ring.

“Sitting” did surprisingly well, hitting 85 percent or so. At least Google picked up the best of them: those guys at ISE sitting on the stairs next to the sign that says, “Sitting on the stairs prohibited due to fire regulations.” Yep, still funny.